Science Fiction Becomes Reality

Learn how a Moody College professor is helping develop technology that can read your thoughts

Twenty years ago, it might have seemed outrageous to think we’d one day have computers that could read our minds.

However, today — as exciting or scary as it seems — it’s close to becoming a reality, and the technology holds promise for a range of fields from health to national security.

At The University of Texas at Austin, researchers are working with Sandia National Laboratories to explore how a new science known as “brain-computer interface,” in which an algorithm predicts our thoughts, can help people who are paralyzed and have lost all motor function to communicate. This includes people who have suffered traumatic brain injuries or who have conditions like amyotrophic lateral sclerosis, also known as Lou Gehrig’s disease or ALS.

“A lot of these patients just stay in bed. Some of them can’t even blink their eyes,” said Jun Wang, an associate professor in Moody College’s Department of Speech, Language, and Hearing Sciences who is helping Sandia to refine the new technology.

“If we can enable a level of communication for these patients, they can resume a meaningful life. Because they have lost motor ability, the brain pathway might be the only option.”

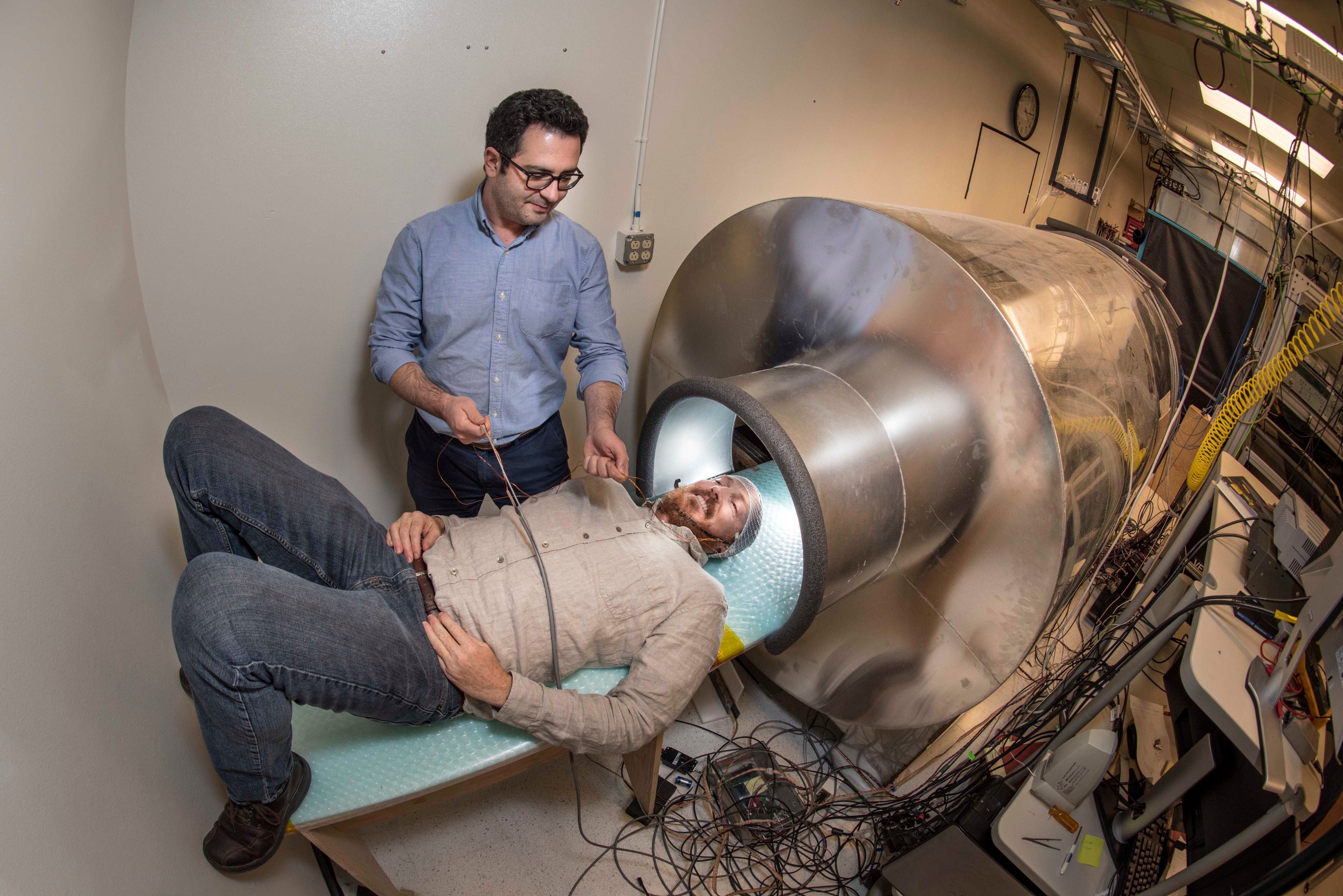

Scientists at Sandia, a research and development laboratory that is part of the U.S. Department of Energy’s National Nuclear Security Administration, have been working for years to develop new sensors that can get a superior signal of the magnetic field around the brain and then decode brain waves into speech. Scientist Amir Borna is the principal investigator on the project, working alongside Sandia scientist Peter Schwindt, and now UT experts.

The ultimate goal is to build a wearable device that can translate magnetic brain waves to language and play it on a computer so patients can communicate with their loved ones.

“A few years ago, we would have thought it was science fiction,” Wang said of the technology. “Now, I think it’s very promising.”

To appreciate how this technology can come to be, it’s important to understand some basics about the brain and how it operates.

Humans have billions of neurons on the surface of the cerebral cortex. When we feel, speak or think, electrical signals pass from neuron to neuron forming circuits in the brain. The electrical current results in a magnetic field around our scalp.

Just above our heads, this magnetic field reorients in a beautiful and complex dance that changes based on what we say — and also what we think. When we think about a sentence, also known as imagined or covert speech, our brain starts to fire, forming a magnetic field similar to when we talk.

Today, doctors and hospitals already use magnetoencephalography, or MEG, to measure the brain’s magnetic signals for purposes such as locating the sources of epilepsy, and researchers use it to study brain development. However, the procedure requires a person to hold still for long periods under a helmet-like dome, which puts a 2 cm gap between the sensors and the brain. This small distance can weaken the brain signal enough that a brain-speech interface isn’t effective.

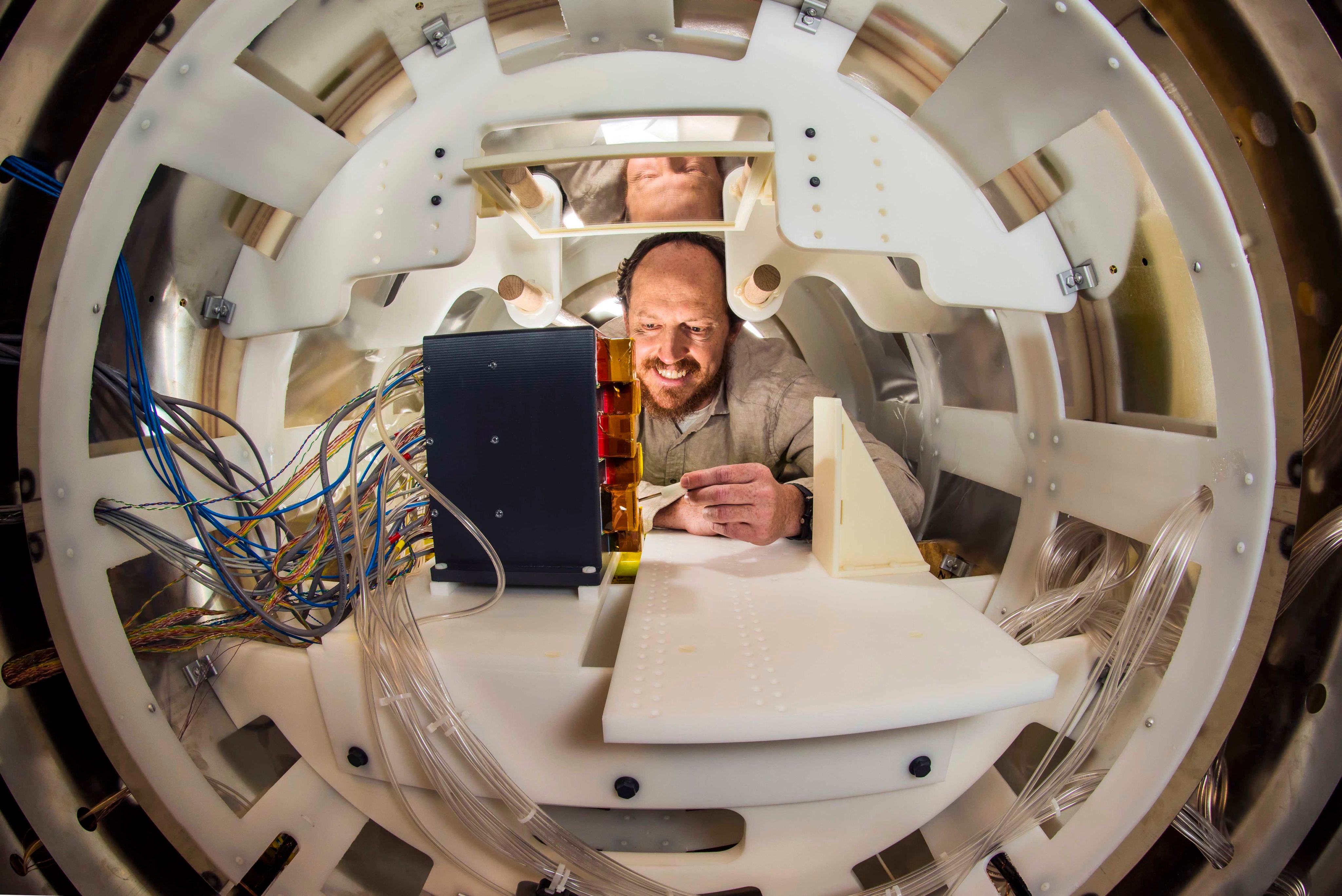

Instead of using superconducting sensors that are fixed into place, Sandia’s prototype uses a sensor technology developed by Schwindt, where quantum sensors are placed directly on a person’s head. This next-generation technology, called OPM-MEG, or optically pumped magnetometer-magnetoencephalography, could change the game in both medicine and research.

In addition to developing the new sensors, Sandia scientists are hoping to tie the various magnetic fields to specific sounds, words and phrases. In other words, when someone says “cat,” the magnetic signal looks one way and when they say “dog” another way.

To figure out the magnetic signals for the tens of thousands of words in the English language is virtually impossible for any one person. That’s where machine learning comes in. If scientists can map just a few words and sounds, they can teach a computer to predict what a person is saying based on that small library of information.

That’s where Wang and his team from UT come in. The team includes David Harwath, an assistant professor in the College of Natural Sciences, and Calley S. Clifford, an assistant professor in the Department of Neurology at Dell Medical School.

Decoding the Brain

A computer scientist by education, Wang has devoted his career in academia to helping people with speech disorders. It’s his way of using his knowledge to make a difference.

Borna calls him one of today’s leaders in machine learning for neural speech decoding, helping to develop the next generation of AI.

This year, Wang began bringing healthy speakers into a lab at Dell Children’s Medical Center in Austin to start teaching a computer to translate magnetic signals into speech.

Wang has devoted his career in academia to helping people with speech disorders. Photo by Lizzie Chen

On a Tuesday in July, Speech, Language, and Hearing Sciences Ph.D. student Kristin Jean Teplansky lay on a gigantic white commercial MEG device in a large, magnetically shielded room. Wang had Teplansky speak 400 different sentences of her choosing.

“I want to wash my car.” “Do you want to watch a movie?”

Meanwhile, the machine mapped the magnetic field around her brain, producing a readout that’s a lot like an EEG: squiggly black and white lines on a page.

The first step for researchers is to remove the noise from that signal, which comes from actions like blinking and from background interference. They have to pull the brain signal out from the reading.

Then a computer will begin by mapping the magnetic field for each sound in the English language.

The English language has 44 unique sounds, just as it has 26 letters in the alphabet. These sounds are known as phonemes, and in one common notation they look like this:

/d/ as in doe and deal

/ch/ as in watch and chime

/ōō/ as in moon

Once researchers can decode the magnetic signal for each individual sound, these individual sounds can form any words and sentences. This is known as “open vocabulary decoding.”
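As a rough illustration of that idea, the sketch below shows how a stream of decoded sounds could be stitched back into words. It is not the team’s method: the tiny `LEXICON`, the simplified sound labels and the `phonemes_to_words` function are all hypothetical, made up here only to show how a small set of sounds can combine into many different words.

```python
# Hypothetical pronunciation lexicon: sequence of sounds -> word.
# The sound labels are simplified placeholders, not a real phonetic alphabet.
LEXICON = {
    ("k", "a", "t"): "cat",
    ("d", "o", "g"): "dog",
    ("w", "o", "ch"): "watch",
    ("d", "oh"): "doe",
}


def phonemes_to_words(phonemes):
    """Split a decoded sound sequence into lexicon words.

    Returns a list of words, or None if the sequence cannot be fully
    covered by entries in LEXICON.
    """
    n = len(phonemes)
    # best[i] is a list of words covering phonemes[:i], or None if unreachable.
    best = [None] * (n + 1)
    best[0] = []
    for end in range(1, n + 1):
        for start in range(end):
            chunk = tuple(phonemes[start:end])
            if best[start] is not None and chunk in LEXICON:
                best[end] = best[start] + [LEXICON[chunk]]
                break
    return best[n]


decoded = ["d", "o", "g", "k", "a", "t"]
print(phonemes_to_words(decoded))
```

Adding a new word to this toy system only means adding a lexicon entry; the sound decoder itself would not need to change, which is the appeal of an open vocabulary.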

To achieve this, the computer needs a lot of data, but by even mapping just a few sounds and sentences, the computer will eventually be able to learn and predict what signals tie to what sounds and make combinations to analyze what exactly we are saying — or thinking.

Ph.D. student Kristin Jean Teplansky is waiting to be wired to the technology. Photo by Lizzie Chen

Testing the Model

But scientists aren’t done once they refine the computer algorithm. Just as important is testing it to make sure it actually works.

Wang and his team will do this by feeding the computer unique examples of sentences that are not part of the “training data,” or the data scientists provided to train the computer. Then, they can see if the model functions effectively, performing the tasks and translating language as it’s intended to do.
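In machine learning, this kind of check is usually done by setting some examples aside before training. The sketch below is a minimal illustration under assumed details: the function names `split_sentences` and `exact_match_accuracy`, the 25 percent hold-out and the placeholder sentences are hypothetical and are not taken from the project.

```python
import random


def split_sentences(sentences, test_fraction=0.25, seed=0):
    """Shuffle the sentences and hold some back for testing.

    Returns (train, test); the two lists never share an item as long as
    the input sentences are unique.
    """
    shuffled = list(sentences)
    random.Random(seed).shuffle(shuffled)
    n_test = max(1, int(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]


def exact_match_accuracy(predicted, reference):
    """Fraction of test sentences the model reproduced exactly."""
    if len(predicted) != len(reference):
        raise ValueError("predicted and reference must have the same length")
    if not reference:
        return 0.0
    matches = sum(p == r for p, r in zip(predicted, reference))
    return matches / len(reference)


# Placeholder sentences standing in for a recording session.
sentences = [f"sentence {i}" for i in range(400)]
train, test = split_sentences(sentences)
print(len(train), len(test))
```

Scoring the model only on the held-back sentences shows whether it has learned something general rather than memorized what it was shown.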

Scientists at Sandia have been doing their own work on brain-computer interface by analyzing the brain signals when people hear specific words — focusing on perceived speech, or what we actually hear, rather than inferred speech.

Through the separate tests, the teams at UT Austin and Sandia are hoping to prove which sensors and which way of mapping speech is more effective.

The brain-computer interface project is funded by Sandia right now for an expected three years, but according to Borna, this is just the beginning.

“There are a lot of applications for brain interface,” said Schwindt, who added that the knowledge is also being examined for national security purposes related to drones and warfare. “We are only going to see that grow over time as these systems become more effective.”

A device that can read our thoughts and produce speech is not expected to be mass produced just yet.

“I view our role as trying to pioneer in the field to develop new types of sensors and demonstrate what can be done,” Schwindt said. “What we are talking about now is science fiction, in a sense, but to reach that point we have to dream and move slowly toward that dream.”